🚀 [Weekly Top 10] Petabyte Scale, Netflix Impressions, Grab AI

This week's highlight, Glassdoor's article on data quality at petabyte scale, emphasizes a proactive approach to data quality that shifts responsibility to data producers.

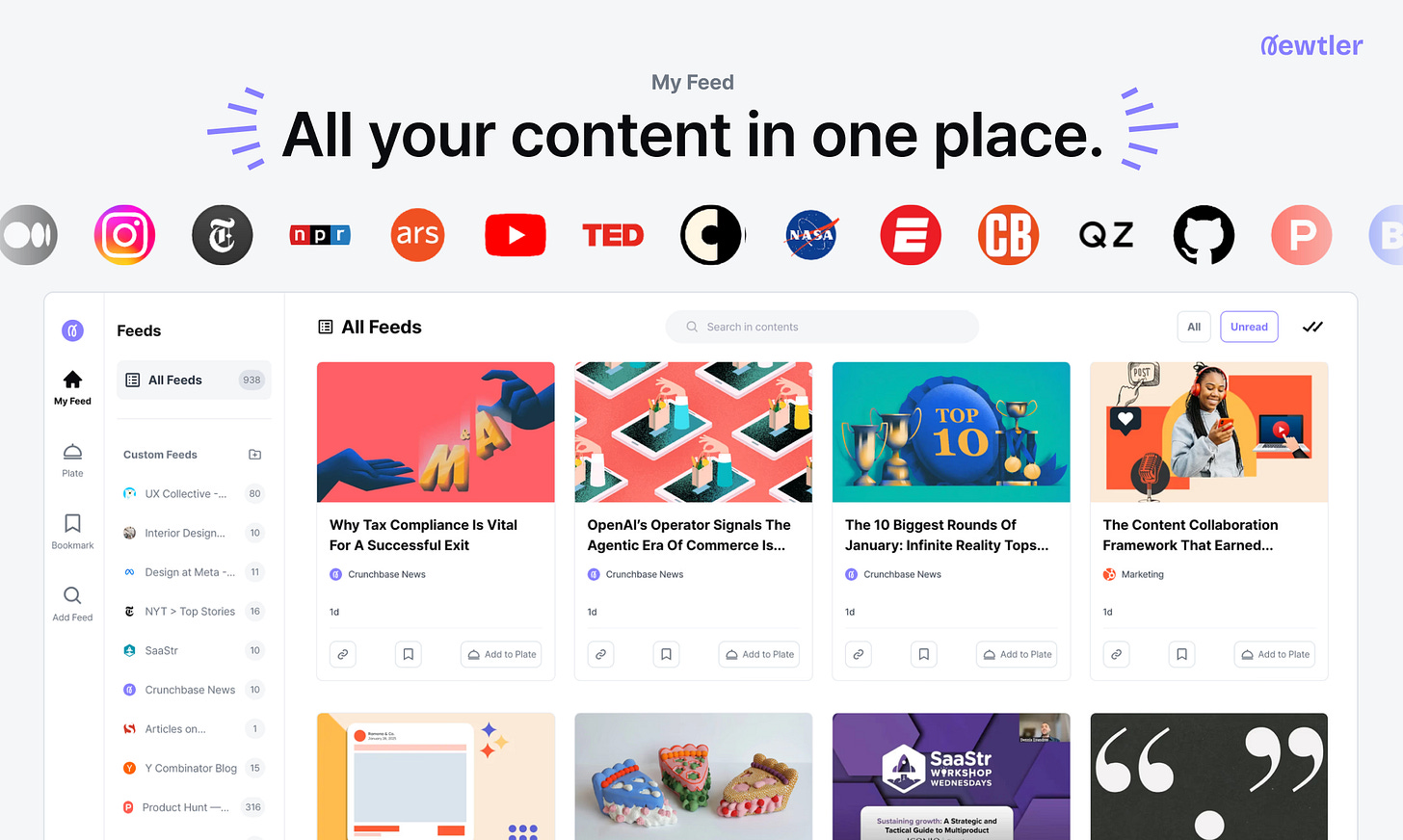

✅ This is a newsletter operated by newtler. ‘newtler’ is a social-based content reader service that allows you to receive new posts from any media of your choice, not just tech blogs, all in one place! 😎

=

Contents

Weekly Tech | Feb 25, 2025 | 10 Contents

The Quest to Understand Metric Movements

AI enhancements to Adaptive Acceptance

Searching for the cause of hung tasks in the Linux kernel

Data Quality at Petabyte Scale: Building Trust in the Data Lifecycle

Estimating Incremental Lift in Customer Value (Delta CV) using Synthetic Control

Introducing Impressions at Netflix

Protecting user data through source code analysis at scale

Revenue Automation Series: Building Revenue Data Pipeline

Grab AI Gateway: Connecting Grabbers to Multiple GenAI Providers

How to debug code with GitHub Copilot

=

1. The Quest to Understand Metric Movements

Stories by Pinterest Engineering on Medium

📣️ newtler Comments

This resource is valuable for anyone trying to understand fluctuations in key metrics.

📄 Summary

The article outlines three approaches for root-cause analysis of metric movements: Slice and Dice, General Similarity, and Experiment Effects.

The Slice and Dice method involves breaking down metrics into segments to identify significant contributors to changes.
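As a rough illustration of the Slice and Dice idea (a minimal sketch, not Pinterest's implementation), the snippet below computes each segment's share of the total change in a metric between two periods. The function name, segment names, and numbers are all made up for this example.

```python
def segment_contributions(before, after):
    """Return (segment, delta, share_of_total_change), largest movers first.

    `before` and `after` map a segment name to the metric value in each period.
    """
    # Sort names so that ties in the ranking below resolve deterministically.
    segments = sorted(set(before) | set(after))
    deltas = {s: after.get(s, 0.0) - before.get(s, 0.0) for s in segments}
    total = sum(deltas.values())
    if total == 0:
        raise ValueError("metric did not move; contribution shares are undefined")
    ranked = sorted(deltas.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [(seg, delta, delta / total) for seg, delta in ranked]


# Hypothetical daily active users by platform, in thousands.
before = {"ios": 500.0, "android": 400.0, "web": 100.0}
after = {"ios": 495.0, "android": 340.0, "web": 105.0}

for seg, delta, share in segment_contributions(before, after):
    print(f"{seg:8s} delta={delta:+7.1f} share={share:6.1%}")
```

A share above 100% or below 0% is possible when segments move in opposite directions, which is itself a useful signal when reading the breakdown.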

General Similarity examines correlations between metrics to find related movements, while Experiment Effects analyzes the impact of A/B tests on metrics.

=

2. AI enhancements to Adaptive Acceptance

Stripe Blog

📣️ newtler Comments

This is recommended for online businesses struggling with false declines in transactions.

📄 Summary

False declines cost US online retailers an estimated $81 billion in lost sales, highlighting the importance of addressing this issue.

Adaptive Acceptance utilizes advanced AI to optimize payment requests and recover falsely declined transactions, achieving a 70% increase in precision.

Recent improvements in model training efficiency allow for rapid updates, enabling the system to adapt to emerging patterns in transaction data.

=

3. Searching for the cause of hung tasks in the Linux kernel

The Cloudflare Blog

📣️ newtler Comments

This post is valuable for understanding and troubleshooting hung task warnings in Linux.

📄 Summary

The post explains the hung task warning, its causes, and the significance of the TASK_UNINTERRUPTIBLE state.

The Linux kernel uses a special thread, khungtaskd, to monitor tasks in the D (TASK_UNINTERRUPTIBLE) state and log a warning when a task stays there longer than a configurable timeout.
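For readers who want to look at D-state tasks from userspace, here is a minimal sketch that scans `/proc/<pid>/stat` and lists processes currently in the D state. It is only a point-in-time snapshot and does not reproduce what khungtaskd does inside the kernel; the function names are hypothetical.

```python
import os


def parse_state(stat_line):
    """Extract the one-letter process state from a /proc/<pid>/stat line."""
    # The command name is wrapped in parentheses and may itself contain
    # spaces or ')', so split after the LAST closing parenthesis.
    rest = stat_line[stat_line.rindex(")") + 1:].split()
    return rest[0]


def uninterruptible_pids(proc_root="/proc"):
    """Return PIDs whose state is 'D' at the moment of the scan."""
    try:
        names = os.listdir(proc_root)
    except OSError:
        return []  # no procfs here (for example, not running on Linux)
    pids = []
    for name in names:
        if not name.isdigit():
            continue
        try:
            with open(os.path.join(proc_root, name, "stat")) as f:
                if parse_state(f.read()) == "D":
                    pids.append(int(name))
        except (OSError, ValueError, IndexError):
            continue  # process exited mid-scan or the line was unreadable
    return sorted(pids)


print(uninterruptible_pids())
```

A process that shows up here once is not necessarily hung, since short stays in the D state are normal during I/O. Repeated sightings of the same PID are what merit a closer look at its stack trace.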

Real-world examples illustrate how to identify the root causes of hung tasks, emphasizing the importance of analyzing stack traces and system metrics.

=

4. Data Quality at Petabyte Scale: Building Trust in the Data Lifecycle

Glassdoor Engineering Blog - Medium

📣️ newtler Comments

This article is essential for organizations aiming to enhance their data quality practices.

📄 Summary

The article emphasizes a proactive approach to data quality that shifts responsibility to data producers.

The implementation of data contracts and static code analysis helps prevent data issues before they reach production.
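To make the idea of a data contract concrete, the sketch below checks records against a declared schema before they are published. It is an assumption-laden toy, not Glassdoor's tooling: the `CONTRACT` fields and the `contract_violations` helper are invented for illustration.

```python
# Hypothetical contract: field name -> required Python type.
CONTRACT = {
    "user_id": int,
    "event_type": str,
    "rating": float,
}


def contract_violations(record, contract=CONTRACT):
    """Return a list of human-readable violations (empty if the record conforms)."""
    problems = []
    for field, expected in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    for field in record:
        if field not in contract:
            problems.append(f"unexpected field: {field}")
    return problems


good = {"user_id": 7, "event_type": "review_submitted", "rating": 4.5}
bad = {"user_id": "7", "event_type": "review_submitted"}

print(contract_violations(good))
print(contract_violations(bad))
```

Running a check like this on the producer side, for example in CI or just before a write, is one way to stop malformed records before they reach downstream consumers.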

Real-time monitoring and anomaly detection further ensure data integrity and reliability throughout the data lifecycle.

=

5. Estimating Incremental Lift in Customer Value (Delta CV) using Synthetic Control

The PayPal Technology Blog - Medium

📣️ newtler Comments

This is valuable for understanding how user actions impact customer value at PayPal.

📄 Summary

Delta CV measures the incremental profit margin from product adoption or user actions over the first year.

The methodology involves causal inference and synthetic control to compare adopters with non-adopters based on transactional features.
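The core of synthetic control can be sketched in a few lines: build a weighted blend of non-adopters that tracks the adopter before adoption, then read the post-adoption gap as the estimated lift. The example below is a deliberately tiny, generic version with two donor series and a grid search over one weight. It is not PayPal's methodology, and all names and numbers are hypothetical.

```python
def fit_weight(treated_pre, donor_a_pre, donor_b_pre, steps=100):
    """Find w in [0, 1] so that w*A + (1-w)*B best matches the treated
    series before adoption (least squared error over a simple grid)."""
    best_w, best_err = 0.0, float("inf")
    for i in range(steps + 1):
        w = i / steps
        err = sum(
            (t - (w * a + (1 - w) * b)) ** 2
            for t, a, b in zip(treated_pre, donor_a_pre, donor_b_pre)
        )
        if err < best_err:
            best_w, best_err = w, err
    return best_w


def estimated_lift(treated_post, donor_a_post, donor_b_post, w):
    """Sum of (observed - synthetic) over the post-adoption periods."""
    synthetic = [w * a + (1 - w) * b for a, b in zip(donor_a_post, donor_b_post)]
    return sum(t - s for t, s in zip(treated_post, synthetic))


# Hypothetical per-period value for one adopter and two non-adopters.
adopter_pre, adopter_post = [13.0, 15.0, 17.0], [21.0, 24.0]
donor_a_pre, donor_a_post = [10.0, 12.0, 14.0], [16.0, 18.0]
donor_b_pre, donor_b_post = [20.0, 22.0, 24.0], [26.0, 28.0]

w = fit_weight(adopter_pre, donor_a_pre, donor_b_pre)
lift = estimated_lift(adopter_post, donor_a_post, donor_b_post, w)
print(f"weight on donor A: {w:.2f}, estimated lift: {lift:.2f}")
```

Real applications use many donors and a constrained optimizer instead of a grid, but the structure is the same: fit on the pre-period, compare on the post-period.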

Delta CV provides insights into user engagement and the cumulative effects of product adoptions, while also highlighting potential biases and limitations in the estimation process.

=

6. Introducing Impressions at Netflix

Netflix TechBlog - Medium

📣️ newtler Comments

This article is valuable for understanding how Netflix enhances user experience through impression tracking.

📄 Summary

Impression history is crucial for personalized recommendations, frequency capping, and monitoring new content interactions.
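As a small illustration of why impression history matters for frequency capping, the sketch below keeps per-profile impression timestamps in memory and answers whether a title has already been shown too often within a time window. This is an assumption-based toy, not Netflix's system; the class and parameter names are invented.

```python
from collections import defaultdict


class ImpressionHistory:
    """In-memory impression log with a simple sliding-window cap."""

    def __init__(self, max_impressions, window_seconds):
        self.max_impressions = max_impressions
        self.window_seconds = window_seconds
        self._seen = defaultdict(list)  # (profile_id, title_id) -> timestamps

    def record(self, profile_id, title_id, ts):
        self._seen[(profile_id, title_id)].append(ts)

    def is_capped(self, profile_id, title_id, now):
        """True if the title was shown max_impressions times within the window."""
        timestamps = self._seen.get((profile_id, title_id), [])
        recent = [t for t in timestamps if now - t < self.window_seconds]
        return len(recent) >= self.max_impressions


history = ImpressionHistory(max_impressions=2, window_seconds=3600)
history.record("profile-1", "title-42", ts=0)
history.record("profile-1", "title-42", ts=100)

print(history.is_capped("profile-1", "title-42", now=200))
print(history.is_capped("profile-1", "title-42", now=3650))
```

The first check falls inside the window for both impressions, so the title is capped; by the second check one impression has aged out and the title is eligible again.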

The architecture centers on a Source-of-Truth dataset built by processing raw impression events, using Apache Kafka and Apache Iceberg for real-time and historical data management.

Future improvements focus on schema management for raw events, automating performance tuning, and enhancing data quality alerts to ensure data integrity.

=

7. Protecting user data through source code analysis at scale

Engineering at Meta

📣️ newtler Comments

This information is crucial for understanding how Meta combats unauthorized scraping.

📄 Summary

Meta's Anti-Scraping team uses static analysis tools to detect potential scraping vectors across its platforms.

The tools track data flow from user-controlled parameters to returned data, identifying vulnerabilities that scrapers could exploit.
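The data-flow idea can be sketched as graph reachability: start from values a caller controls and see which returned fields they can influence. The example below is a simplified stand-in, not Meta's tooling, and the node names and the `tainted_sinks` helper are hypothetical.

```python
from collections import deque


def tainted_sinks(flows, sources, sinks):
    """Return the sinks reachable from any source.

    `flows` maps a node to the nodes its value flows into.
    """
    seen = set(sources)
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        for nxt in flows.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return sorted(seen & set(sinks))


# Hypothetical data-flow edges extracted from an endpoint's source code.
flows = {
    "request.user_id": ["query.filter"],
    "query.filter": ["db.rows"],
    "db.rows": ["response.followers"],
    "config.page_size": ["response.page_size"],
}

print(tainted_sinks(
    flows,
    sources=["request.user_id"],
    sinks=["response.followers", "response.page_size"],
))
```

Here only `response.followers` is flagged, because the page size comes from configuration and not from a user-controlled parameter.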

While static analysis helps mitigate scraping risks, it cannot catch all issues due to the complexity of unauthorized scraping methods.

=

8. Revenue Automation Series: Building Revenue Data Pipeline

Yelp Engineering and Product Blog

📣️ newtler Comments

This article is essential for understanding the complexities of automating revenue recognition processes.

📄 Summary

The article outlines how Yelp built a Revenue Data Pipeline to integrate with a Revenue Recognition SaaS solution, improving efficiency.

The article discusses the challenges faced in translating ambiguous business requirements into engineering-friendly terms and the solutions implemented.

It highlights the technical choices made for data processing and the use of Spark ETL to manage complex data transformations effectively.

=

9. Grab AI Gateway: Connecting Grabbers to Multiple GenAI Providers

Grab Tech

📣️ newtler Comments

This is essential for anyone looking to leverage Generative AI technologies effectively.

📄 Summary

Grab AI Gateway provides centralized access to multiple AI providers, simplifying authentication and resource management.

It enables experimentation with various AI models while minimizing costs through a shared capacity pool.

The gateway ensures compliance with privacy standards through thorough auditing and offers a unified API interface for ease of use.
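The gateway pattern described above can be sketched as a single entry point that routes requests to registered providers and records an audit entry per call. This is a minimal illustration under assumed names, not Grab's implementation; the providers here are plain functions standing in for real GenAI APIs.

```python
from typing import Callable, Dict, List


class Gateway:
    """One interface in front of several text-completion providers."""

    def __init__(self) -> None:
        self._providers: Dict[str, Callable[[str], str]] = {}
        self.audit_log: List[dict] = []

    def register(self, name: str, handler: Callable[[str], str]) -> None:
        self._providers[name] = handler

    def complete(self, user: str, provider: str, prompt: str) -> str:
        if provider not in self._providers:
            raise KeyError(f"unknown provider: {provider}")
        reply = self._providers[provider](prompt)
        # Log metadata only, so the audit trail does not store prompt text.
        self.audit_log.append(
            {"user": user, "provider": provider, "prompt_chars": len(prompt)}
        )
        return reply


# Stand-in providers; a real gateway would call vendor APIs here.
gateway = Gateway()
gateway.register("upper", lambda prompt: prompt.upper())
gateway.register("reverse", lambda prompt: prompt[::-1])

print(gateway.complete("alice", "upper", "hello"))
print(gateway.complete("bob", "reverse", "hello"))
print(gateway.audit_log)
```

Because callers only see `complete`, switching or adding a provider becomes a registration change instead of a change in every client.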

=

10. How to debug code with GitHub Copilot

The GitHub Blog

📣️ newtler Comments

This guide is essential for developers looking to enhance their debugging process with AI assistance.

📄 Summary

GitHub Copilot can streamline debugging by providing real-time suggestions, analyzing code, and generating test cases.

It offers various features such as interactive assistance in Copilot Chat, contextual help in IDEs, and support for pull requests.

Best practices include providing clear context, refining prompts, and using slash commands to optimize the debugging workflow.

=
